Accuracy in Machine Learning: How Much is Good Enough, and When Should You Use Other Metrics?

Photo by Vitolda Klein on Unsplash

I hope you are doing great, today we will discuss about one of the most commonly used and simplest evaluation for classificatoin problem called accuracy. Connected to accuracy we will also discuss what is the major drawback of using accuracy, when not to use accuracy and how can we solve the drawback of accuracy. So without any further delay let's get started.

What are evaluation metrics ?

Evaluation metrics are simply the parameters which helps us to develop an understanding of how well our machine learning model is performing while solving a classification or regression problem. The thing to keep in mind is that based on the type of problem the evaluation metrics are also of 2 types: classification metrics and regression metrics. In this blog post we will talk about accuracy as an classification metric and also about confusion matrix.

What is accuracy ?

Accuracy is a classification metric which simply gives us a numerical value representing a percantage of correctness of our model. Now in order to get the numerical value we simply take ratio of total number of correct predictions made by our model to total number of predictions made by our model and finally divide the resultant value by 100 to get percentage value.

How much accuracy can be considered as good accuracy ?

This is one of the most commonly asked interview question, and if you would answer that above 90% any accuracy value can be labeled as good accuracy then let me tell you my friend that you are wrong, infact even 95% accuracy is also not good and not even acceptable in certain scenarios.

So instead of simply making assumption that above 90% any accuracy value can be considered as good you should answer that interpretation of good accuracy is totally based on the problem we are solving.

95% accuracy is not acceptable💊

Let us assume that we have made a classification-based machine learning model that will help us to find out whether a person is having a brain tumor or not based on the clinical images we will feed into the model.

Let's say that we got 95% accuracy but even after getting this much accuracy, it is of no use in the real world because 95% accuracy means that out of 100 people, the chances are that 5 people will be there having brain tumor but our model may not be able to predict it, now since in this case the stakes are very high thus 95% accuracy will not be considered as good accuracy.

95% accuracy is good ⛈️

Let us assume that we have made a machine learning model that on basis of some parameters such as temperature, humidity and wind speed will give us the predicted date on which rain could happen, in this case even though instead of 95% if we got 80% accuracy that will be considered as good because the stakes are not as high as in the 1st example.

When not to use accuracy ?

Accuracy should not be used in case we have some major imbalance in our dataset, because in such kind of scenarios the accuracy will only give us a false satisfaction that our model is performing good but in reality our model migh be performing worse. To better understand this point let me give you an example.

Let say we are building a machine learning model that will hlep us to detect that whether a particular job posting is fake or not. Now it is obvious that the number of fake job postings will be less in number as compared to genuine job postings let say 10:90, and because of this our model will be biased towards the genuine job postings.

Due to biasness of model towards the genuine job postings during the prediction stage it will predict that every job posting is genuine but in reality only 90% of job postings are geuine thus our model accuracy will be 90%, but here the problem is that we still do not have much information about the false negatives or false positives.

Confusion matrix

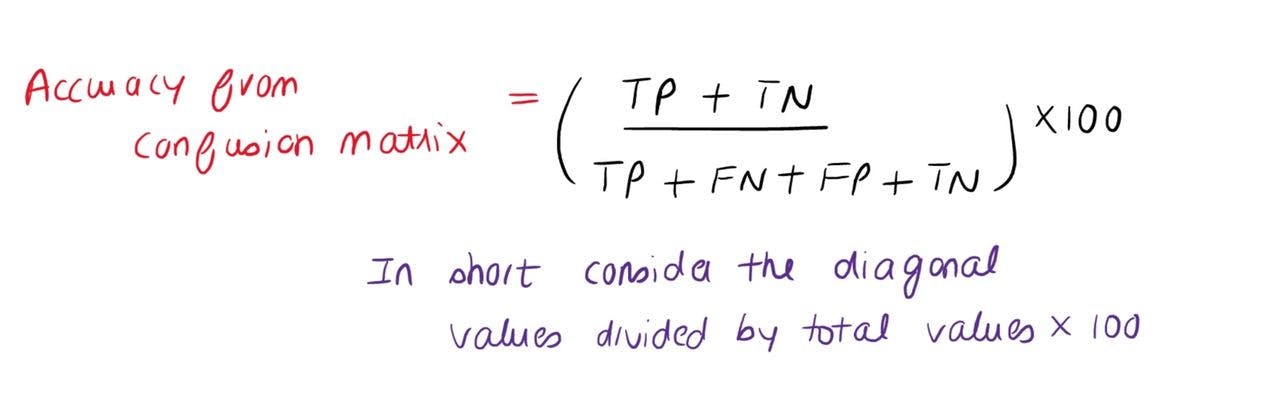

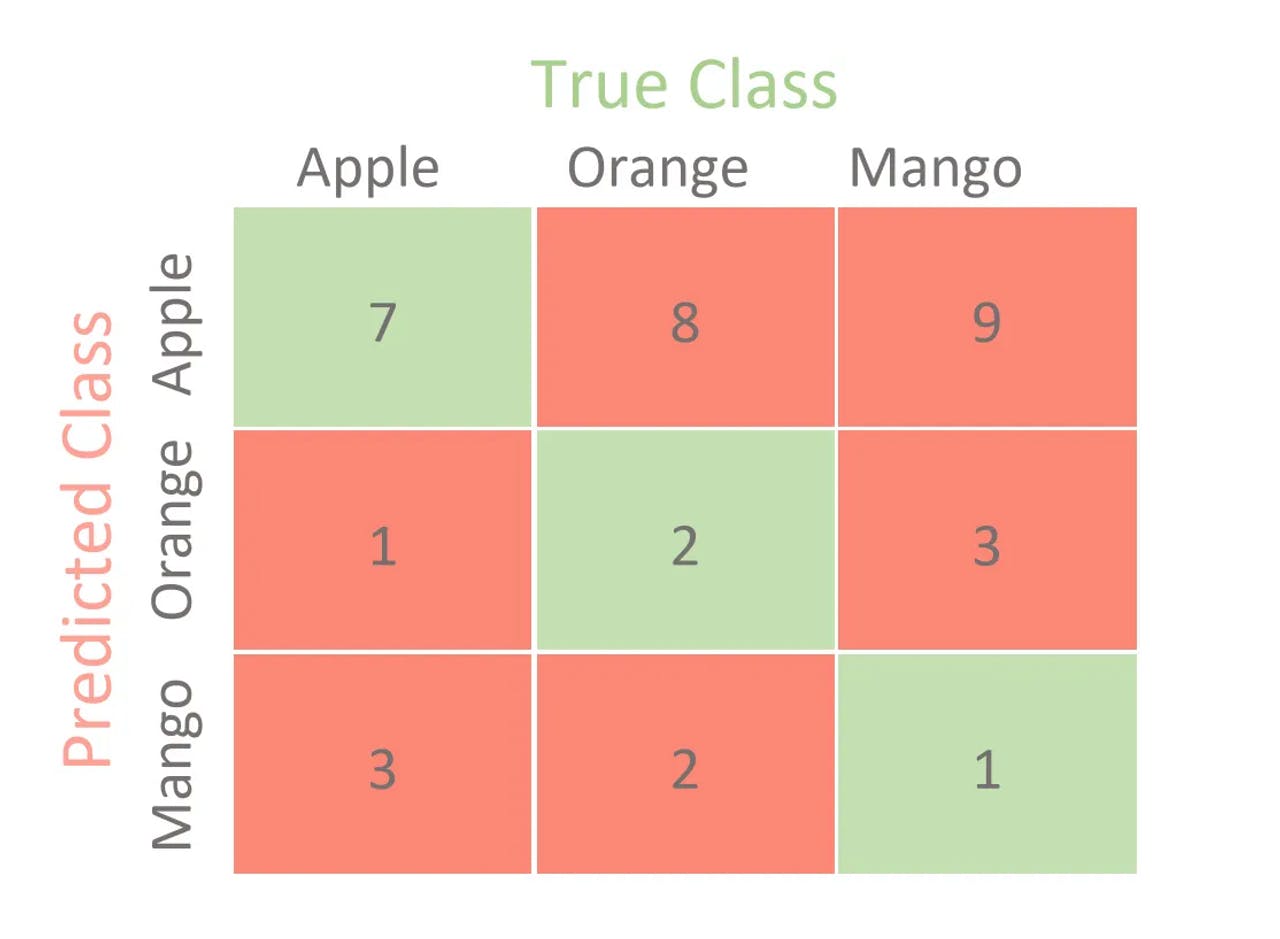

Confusion matrix is a classification metric which got introduced to cover the major drawback of using accuracy of not providing any information about the type of error. By using confusion matrix we not only can find the accuracy but it also tells us about the type and the percentage of correctness of our algorithm.

In confusion matrix there are around 4 terms which we need to keep in mind 👇🏽

True positive : It means our model is predicting that the output belongs to a particular class and it’s True

True negative : It means when our model is predicting that the output doesn’t belongs to a particular class and its True

False positive : It means when our model is predicting that the output belongs to a particular class but in reality that’s the output doesn’t belong to that class

False negative : It means when our model is predicting that the output doesn’t belongs to a particular class but in reality the output belong to that class.

Short note

I hope you good understanding of what are evaluation metrics, what is accuracy, how much accuracy can be considered as good, when not to use accuracy and what is confusion matrix, so if you liked this blog or have any suggestion kindly like this blog or leave a comment below it would mean a to me.